Introduction

For about half a century we have been living in a world where the speed of computers grew at an exponential rate. This growth is usually attributed to Moore’s law, which is actually Gordon Moore’s observation that the number of transistors in an integrated circuit doubled approximately every two years. But today, that rate has almost levelled off. And Moore’s law is not the only exponential that has come to an end: the same is true for Dennard scaling. In networking we are also hitting limits. We have reached the Shannon limit in optical communication, and in network ASIC design we have reached the limit of serial I/O bandwidth. The next sections explore these challenges, and the final sections describe R&D that is exploring ways to overcome them.
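To make the doubling concrete, here is a small Python sketch of the growth factor implied by a fixed doubling period. The function name `growth_factor` and the 50-year horizon are illustrative assumptions, not figures taken from a source.

```python
def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Relative growth after `years` if a quantity doubles every
    `doubling_period_years` (the Moore's law rule of thumb)."""
    if doubling_period_years <= 0:
        raise ValueError("doubling period must be positive")
    return 2 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Half a century of doubling every two years is 25 doublings.
    print(f"{growth_factor(50):,.0f}x more transistors")  # 33,554,432x more transistors
    # The equivalent compound annual growth rate.
    print(f"{growth_factor(1) - 1:.0%} per year")  # 41% per year
```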

The End Of Moore’s Law And Dennard Scaling

For many decades the number of transistors on an integrated circuit grew exponentially. At the same time the power consumed by each transistor decreased, because its operating voltage and current were lowered as it shrank. As a consequence, the heat dissipated per transistor also decreased. Combined with Moore’s law, this fortunately resulted in an almost constant power density (heat dissipated per unit of chip area) throughout all these decades. This is called Dennard scaling.
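The arithmetic behind Dennard scaling can be sketched in a few lines of Python. This is a minimal model of ideal constant-field scaling, assuming that shrinking linear dimensions by a factor k divides capacitance and supply voltage by k and lets the clock frequency rise by k, with dynamic power per transistor taken as C·V²·f. The `voltage_scales=False` case is added for comparison: it models a supply voltage that no longer drops with each node, which is the commonly cited reason Dennard scaling broke down. The function name and parameters are illustrative.

```python
from fractions import Fraction


def dennard_scaling(k, voltage_scales=True):
    """Relative change per process shrink by linear factor k (ideal model).

    Values are ratios against the previous node, e.g. Fraction(1, 4)
    means "a quarter of what it was".
    """
    k = Fraction(k)
    if k <= 1:
        raise ValueError("k must be > 1 for a shrink")
    capacitance = 1 / k                                 # C shrinks with dimensions
    voltage = 1 / k if voltage_scales else Fraction(1)  # V ~ 1/k, or stuck
    frequency = k                                       # shorter gate delay, faster clock
    area = 1 / k**2                                     # area per transistor
    power = capacitance * voltage**2 * frequency        # dynamic power C*V^2*f
    return {
        "transistors_per_area": 1 / area,
        "power_per_transistor": power,
        "power_density": power / area,                  # heat per unit of chip area
    }


if __name__ == "__main__":
    for scaled in (True, False):
        r = dennard_scaling(2, voltage_scales=scaled)
        print(f"voltage scales={scaled}: power density x{r['power_density']}")
```

With ideal scaling, four times as many transistors fit in the same area at a quarter of the power each, so power density stays at x1; if the voltage stops scaling, the same shrink quadruples it.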

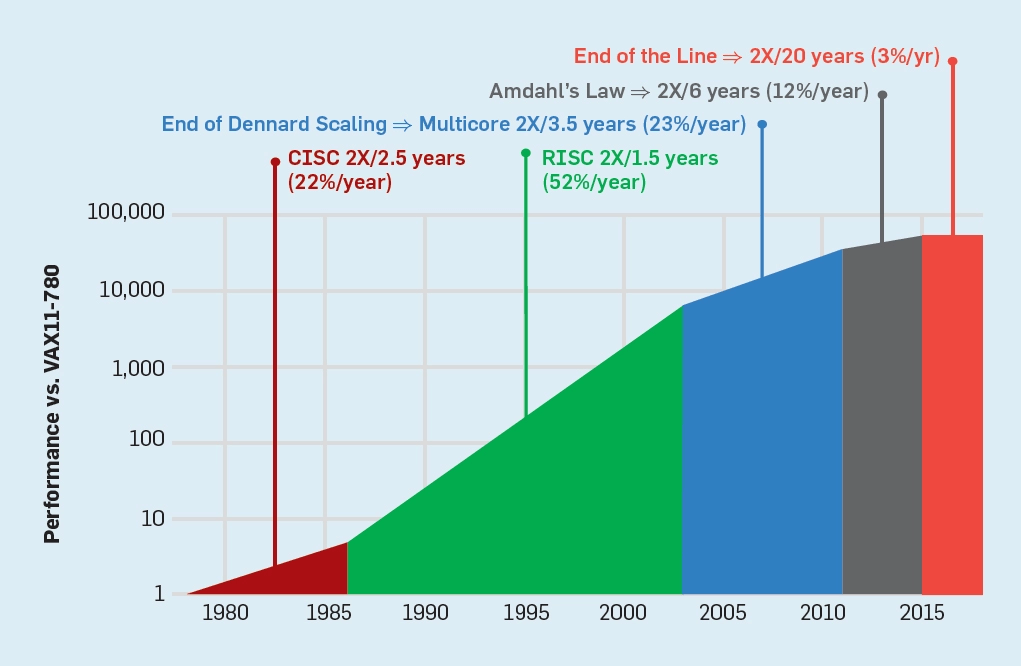

Moore’s Law (© 2019 CACM, Vol 62 No. 2, A New Golden Age for Computer Architecture, J. Hennessy, D. Patterson)

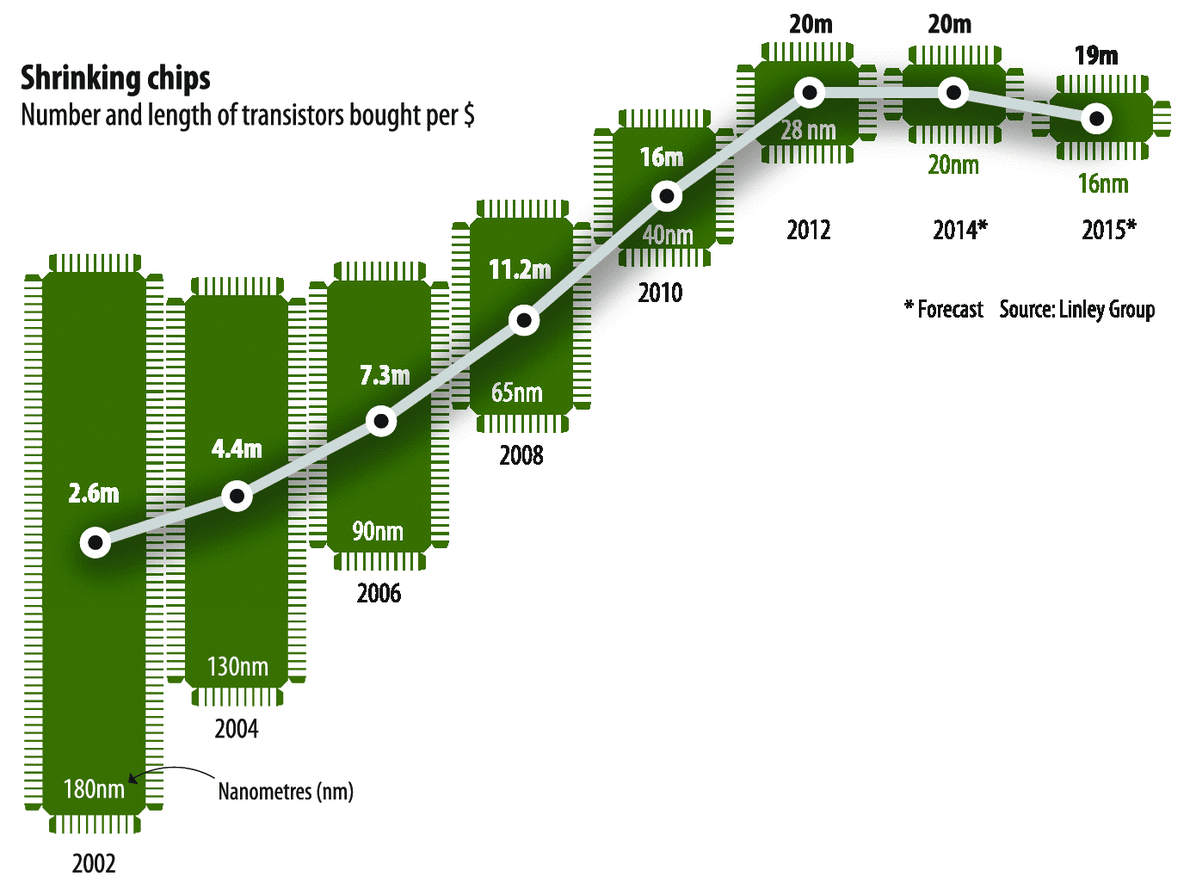

However, as the figure above shows, the era of exponential growth in performance is over. Today, improvements in CPU performance are very modest compared to what we were used to 20-30 years ago. We are also reaching physical limits. Transistors have become so small that manufacturing is getting very complex and expensive. The figure below shows that the number of transistors you can buy per dollar has been decreasing for several years.

Number and length of transistors bought per Dollar (Linley Group)

Reaching The Shannon Limit

In networking we are also running into limits. The Shannon limit is an upper bound on the rate at which information can be sent reliably across a communication channel of a given bandwidth. That bound depends on the signal-to-noise ratio.

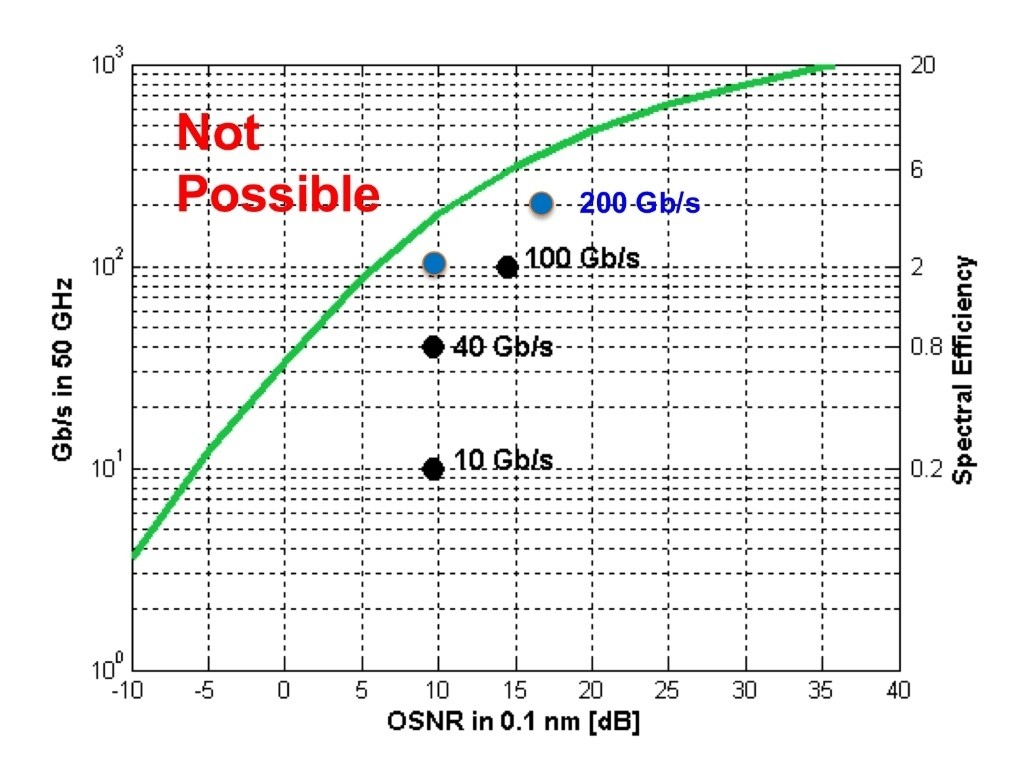

Optical transmission speeds in relation to Shannon’s limit (source: Ciena)

The figure above shows that we have reached that limit in optical fiber. Because the signal-to-noise ratio degrades as the distance increases, in practice you have to make a choice: transmit your data at a lower speed over longer distances, or at a higher speed over shorter distances.
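The Shannon-Hartley formula, C = B·log2(1 + S/N), makes this trade-off concrete. The Python sketch below computes capacity and spectral efficiency (bit/s per Hz). To model distance it assumes, as a deliberate simplification, an amplified link in which every span adds the same noise power, so the signal-to-noise ratio falls in proportion to the number of spans. The 20 dB starting value and the helper names are hypothetical, and real fiber links also suffer from nonlinear effects that this ignores.

```python
import math


def shannon_capacity(bandwidth_hz: float, snr_linear: float) -> float:
    """Shannon-Hartley channel capacity in bit/s: C = B * log2(1 + S/N)."""
    return bandwidth_hz * math.log2(1 + snr_linear)


def db_to_linear(snr_db: float) -> float:
    """Convert a power ratio from decibels to a linear ratio."""
    return 10 ** (snr_db / 10)


def spectral_efficiency(snr_one_span_db: float, spans: int) -> float:
    """Upper bound in bit/s/Hz after `spans` amplified spans.

    Simplifying assumption: each span adds the same noise power, so the
    signal-to-noise ratio is divided by the number of spans.
    """
    snr = db_to_linear(snr_one_span_db) / spans
    return shannon_capacity(1.0, snr)


if __name__ == "__main__":
    # Hypothetical link: 20 dB SNR after one span; longer reach = more spans.
    for spans in (1, 2, 4, 8, 16):
        print(f"{spans:2d} spans: {spectral_efficiency(20.0, spans):.2f} bit/s/Hz")
```

Under this model each doubling of the distance halves the signal-to-noise ratio and, at high SNR, costs roughly one bit/s/Hz, which is the speed-versus-reach choice described above.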

Limits To ASIC Serial I/O

Modern high-capacity networking ASICs have many I/O ports. Many popular data centre switches these days have 32 ports at 100 Gbps. On the ASIC, however, each of these ports is not a single 100 Gbps interface but four 25 Gbps lanes (running on SerDes rated at 28 Gbps). So these ASICs have 128 lanes, each carrying an electrical signal at 28 Gbps. SerDes with a speed of 56 Gbps are starting to arrive, but the next step, 112 Gbps SerDes, is expected to be the last. Beyond that, manufacturing becomes far too expensive. This is because each of these 128 ASIC lanes needs copper tracks on the circuit board to the front panel ports. Maintaining signal integrity on all those tracks is difficult and becomes harder as the signal rate goes up; heat dissipation is also a problem.
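The lane arithmetic above can be checked with a short Python sketch. It assumes, by analogy with 28 Gbps SerDes carrying 25 Gbps lanes, that 56 Gbps and 112 Gbps SerDes carry 50 Gbps and 100 Gbps of payload per lane; the dictionary and function names are hypothetical. It shows why faster SerDes matter: for a fixed switch capacity, each step halves the number of lanes, and therefore of copper tracks, that have to leave the ASIC.

```python
# Assumed payload per lane for each SerDes rating (Gbps). The 28 -> 25 pair
# follows the text; 56 -> 50 and 112 -> 100 are assumed by analogy.
SERDES_PAYLOAD_GBPS = {28: 25, 56: 50, 112: 100}


def lanes_for_ports(ports: int, port_speed_gbps: int, lane_gbps: int) -> int:
    """Electrical lanes an ASIC needs to serve `ports` front panel ports."""
    if port_speed_gbps % lane_gbps:
        raise ValueError("port speed must be a whole number of lanes")
    return ports * (port_speed_gbps // lane_gbps)


def lanes_for_capacity(capacity_gbps: int, lane_gbps: int) -> int:
    """Lanes needed for a given total switch capacity (rounded up)."""
    return -(-capacity_gbps // lane_gbps)


if __name__ == "__main__":
    # 32 x 100 Gbps on 28 Gbps SerDes (25 Gbps lanes), as in the text.
    print(lanes_for_ports(32, 100, SERDES_PAYLOAD_GBPS[28]))  # 128
    # Lanes needed for a hypothetical 12.8 Tbps switch at each SerDes rate.
    for rating, lane_gbps in SERDES_PAYLOAD_GBPS.items():
        print(f"{rating} Gbps SerDes: {lanes_for_capacity(12_800, lane_gbps)} lanes")
```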

Domain Specific Hardware And Software

Now that we have reached the end of Moore’s law, we need to find new solutions. The 2017 Turing Award winners, John L. Hennessy and David A. Patterson, actually see a bright future for computer science. They titled their Turing Award lecture “A New Golden Age for Computer Architecture”. In their lecture they look for solutions in new chip architectures and in bringing various disciplines together. That should result in close cooperation between processor designers, compiler architects, computer language designers and security experts. A new ASIC architecture should have security built in from the start (security by design). They also expect domain-specific architectures to emerge. A general-purpose CPU architecture is too slow for many workloads, so we need to design domain-specific hardware and domain-specific software, tailored to the job at hand.

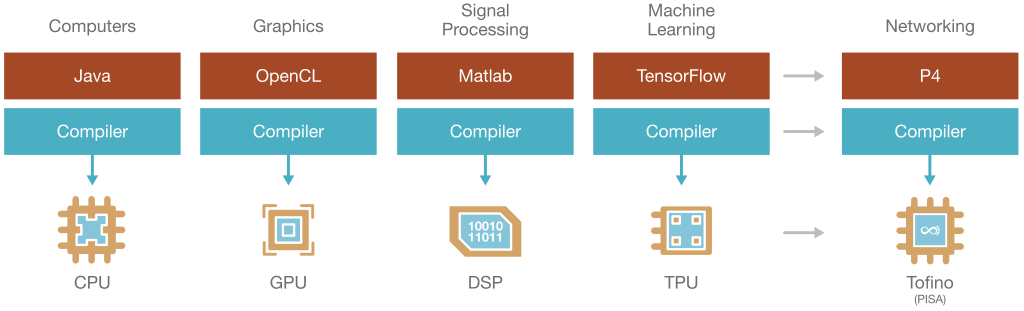

Domain Specific Hardware And Software (source: Barefoot Networks)

The figure above shows a few examples of existing domain-specific architectures. In my own work I have been using P4 for networking for several years.

Silicon Photonics

Silicon photonics could be a solution to the ASIC I/O challenges. A main problem is the length of the copper tracks between the ASIC and the front panel ports. That length can be reduced by replacing part of each copper track with an optical link.

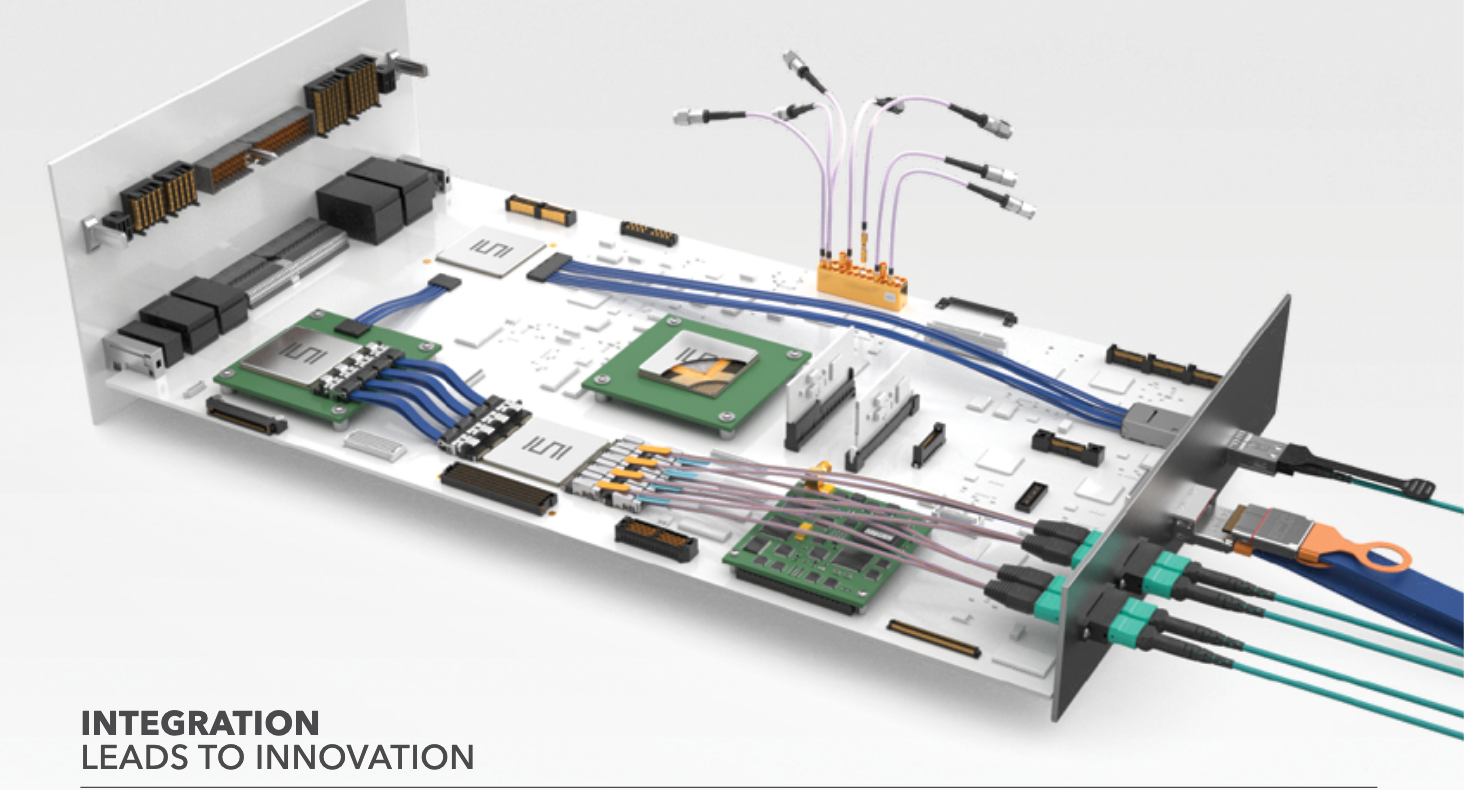

Optical link between ASIC and front panel port (source: Samtec)

The figure above shows a solution by Samtec. An electrical-to-optical chip is placed close to the network ASIC, and fiber connects that chip to the optical front panel ports. Silicon photonics integrates lasers and other optical components on the chip, which lowers the cost of a solution like this.

Multipathing In Networking

I have been a proponent of multipathing in networking for many years. Achieving higher interface speeds is getting more difficult and expensive, and transmitting data over multiple parallel paths is a possible solution. This is already done at the link layer: 40G and 100G are transmitted as 4x10G and 4x25G. But we can also do this at the transport layer, with e.g. MPTCP. Apple has been using MPTCP for many years, mostly to “roam” between 4G and Wi-Fi: you can start a session over Wi-Fi at the office, and when you go outside, 4G seamlessly takes over the session. But MPTCP can also be used to load balance traffic over multiple paths, which increases resiliency too. We have also seen this kind of parallelism in storage (RAID) and CPUs (multi-core).
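The core idea of multipathing, splitting one stream over parallel paths and putting it back together at the receiver, can be sketched in Python. This toy model numbers fixed-size segments, assigns them round-robin to paths and reorders them by sequence number on arrival. It is a simplification for illustration only; real protocols such as MPTCP use more elaborate schedulers that also take path delay into account. The function names and segment size are hypothetical.

```python
import random


def stripe(data: bytes, num_paths: int, segment_size: int):
    """Split `data` into numbered segments and assign them round-robin to paths."""
    if num_paths < 1 or segment_size < 1:
        raise ValueError("need at least one path and a positive segment size")
    paths = [[] for _ in range(num_paths)]
    for seq, offset in enumerate(range(0, len(data), segment_size)):
        paths[seq % num_paths].append((seq, data[offset:offset + segment_size]))
    return paths


def reassemble(arrivals):
    """Restore the original byte stream from segments arriving in any order."""
    # Sequence numbers are unique, so sorting the (seq, chunk) tuples
    # orders the segments by seq alone.
    return b"".join(chunk for _, chunk in sorted(arrivals))


if __name__ == "__main__":
    message = b"Transmitting data over multiple parallel paths " * 20
    paths = stripe(message, num_paths=4, segment_size=64)
    # Paths have different delays, so segments arrive interleaved and out of order.
    arrivals = [segment for path in paths for segment in path]
    random.Random(42).shuffle(arrivals)
    assert reassemble(arrivals) == message
    print([len(path) for path in paths], "segments per path")
```

Round-robin keeps the load on the paths within one segment of each other, which is the load-balancing effect described above; the sequence numbers are what make out-of-order arrival harmless.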